OpenAI has introduced GPT-4o mini, its latest and most affordable small AI model. It is 60% cheaper than GPT-3.5 Turbo and helps developers build faster, more affordable AI applications. Its low cost and low latency make it suitable for a wide range of tasks. So, let's take a closer look at GPT-4o mini, cover the details we know so far, and see how it performs compared to other AI models.

Part 1. What is GPT-4o Mini – Replacing GPT-3.5 Turbo as the Smallest OpenAI Model

GPT-4o mini is the newest OpenAI model, released on July 18, 2024. It is the most cost-efficient small AI model to date, priced at 15 cents per million input tokens and 60 cents per million output tokens. This makes it 60% cheaper than GPT-3.5 Turbo.

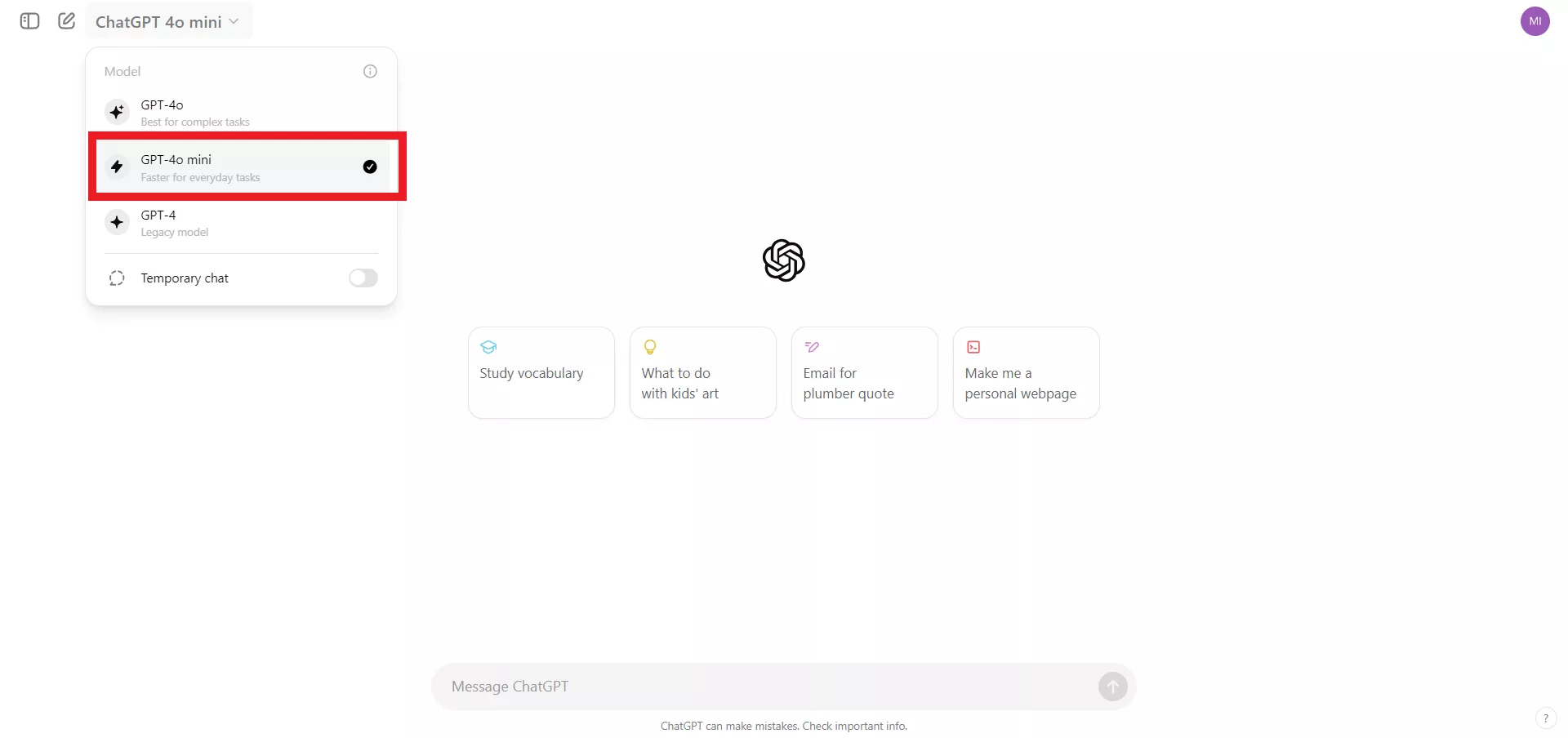

GPT-4o mini is a fast AI model for everyday tasks. It can handle everything GPT-3.5 Turbo supports, but with faster and cheaper responses. It can call multiple APIs, deliver fast, real-time text responses, accept large volumes of context (such as a conversation history or a full code base), and much more.

GPT-4o mini has a 128k token context window and supports up to 16k output tokens per request. Its knowledge extends to October 2023. Currently, it supports text and vision in the API, while support for video and audio inputs and outputs is expected to arrive later.
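To make the context-window figures above concrete, here is a minimal sketch of trimming a long conversation history so that it fits the model's input budget. It rests on several assumptions that are not from OpenAI: the limits are the rounded "128k" and "16k" figures quoted in this article, the output is assumed to share the window with the input, and `estimate_tokens` is a hypothetical helper that guesses roughly four characters per token instead of using a real tokenizer.

```python
from typing import Dict, List

# Rounded figures as quoted in the article (assumption: exact limits may differ).
CONTEXT_WINDOW = 128_000  # total tokens the model can see per request
MAX_OUTPUT = 16_000       # tokens reserved for the model's reply


def estimate_tokens(text: str) -> int:
    """Hypothetical rough estimate: about 4 characters per token."""
    return max(1, len(text) // 4)


def trim_history(
    messages: List[Dict[str, str]], reserve_output: int = MAX_OUTPUT
) -> List[Dict[str, str]]:
    """Keep the most recent messages that fit in the input budget."""
    budget = CONTEXT_WINDOW - reserve_output
    kept: List[Dict[str, str]] = []
    used = 0
    # Walk from newest to oldest so the latest turns survive the trim.
    for message in reversed(messages):
        cost = estimate_tokens(message["content"])
        if used + cost > budget:
            break
        kept.append(message)
        used += cost
    kept.reverse()  # restore chronological order
    return kept
```

In a real application, a proper tokenizer would replace the character-based estimate, since the four-characters-per-token rule is only a rough guide for English text.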

The points below summarize the key capabilities of GPT-4o mini:

- Model Nature: It is a small AI model that assists in everyday tasks.

- Context Window: It has a 128k token context window and supports up to 16k output tokens per request.

- Knowledge Base: It has knowledge up to October 2023.

- Performance: It delivers impressive performance despite its small size and low cost. It replaces GPT-3.5 Turbo with a more reliable, lower-latency option.

- Usability: It supports a wide range of tasks, including API calls, real-time responses, managing large context volumes, and more.

- Cost-Effectiveness: It is the cheapest small AI model, costing 15 cents per million input tokens and 60 cents per million output tokens.

- Safety Features: It offers the same top-notch safety capabilities seen with GPT-4o.

In short, GPT-4o mini shines as a modern, low-cost, and high-performance AI model that makes AI applications more affordable and accessible to the masses.

GPT-4o mini is available to ChatGPT Free, Team, and Plus users in place of GPT-3.5, and Enterprise users will get access starting this week. It is also available as a text and vision model in the Batch API, Chat Completions API, and Assistants API.
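For developers, a call through the Chat Completions API might look like the sketch below. It assumes the official `openai` Python package is installed and an `OPENAI_API_KEY` environment variable is set. The helper names `build_request` and `ask`, the system prompt, and the 256-token output cap are illustrative choices, not part of any official example.

```python
from typing import Any, Dict


def build_request(question: str, max_output_tokens: int = 256) -> Dict[str, Any]:
    """Assemble the keyword arguments for a Chat Completions call."""
    return {
        "model": "gpt-4o-mini",
        "messages": [
            {"role": "system", "content": "You are a concise assistant."},
            {"role": "user", "content": question},
        ],
        # Illustrative cap on the reply length (hypothetical default).
        "max_tokens": max_output_tokens,
    }


def ask(question: str) -> str:
    """Send the question to GPT-4o mini and return the text of the reply."""
    # Imported lazily so build_request can be used without the package installed.
    from openai import OpenAI

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    response = client.chat.completions.create(**build_request(question))
    return response.choices[0].message.content


if __name__ == "__main__":
    print(ask("Summarize what a context window is in one sentence."))
```

Keeping the request construction separate from the network call makes it easy to inspect or log the payload before spending any tokens.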


Part 2. GPT-4o Mini vs. Other AI Models

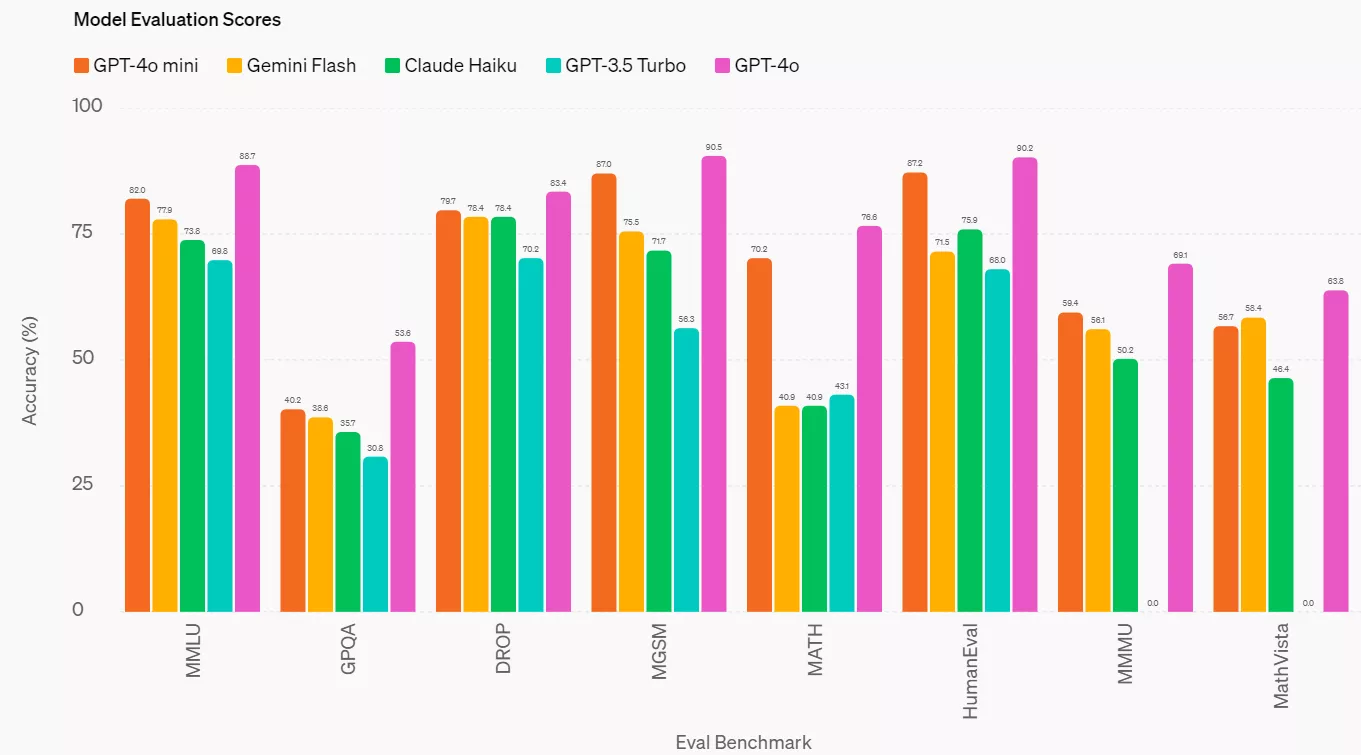

OpenAI has shared evaluation scores comparing GPT-4o mini with other AI models, including GPT-4o, GPT-3.5 Turbo, Claude Haiku, and Gemini Flash.

The evaluation scores show that GPT-4o mini scores slightly lower than GPT-4o but outperforms the other models in the comparison. It shows stronger textual intelligence and multimodal reasoning than GPT-3.5 Turbo and the other small models, along with better long-context performance than GPT-3.5 Turbo and strong function-calling capability.

Simply put, GPT-4o mini posts strong scores on several benchmarks, including:

- Reasoning tasks – 82% MMLU

- Math and coding proficiency – 87% MGSM

- Multimodal reasoning – 59.4% MMMU

Overall, GPT-4o mini is not as capable as GPT-4o but is an excellent replacement for other small AI models, including GPT-3.5 Turbo.

In terms of pricing, GPT-4o mini is more cost-effective than other similar models. It is 60% cheaper than GPT-3.5 Turbo. The price table below shows the cost-efficiency of GPT-4o mini compared to other models:

| AI Model | Input cost (per million tokens) | Output cost (per million tokens) |
| --- | --- | --- |
| GPT-4o mini | $0.15 | $0.60 |
| GPT-4o | $5.00 | $15.00 |
| GPT-3.5 Turbo-0125 | $0.50 | $1.50 |
| Gemini Flash | $0.35 | $1.05 |
| Claude Haiku | $0.25 | $1.25 |

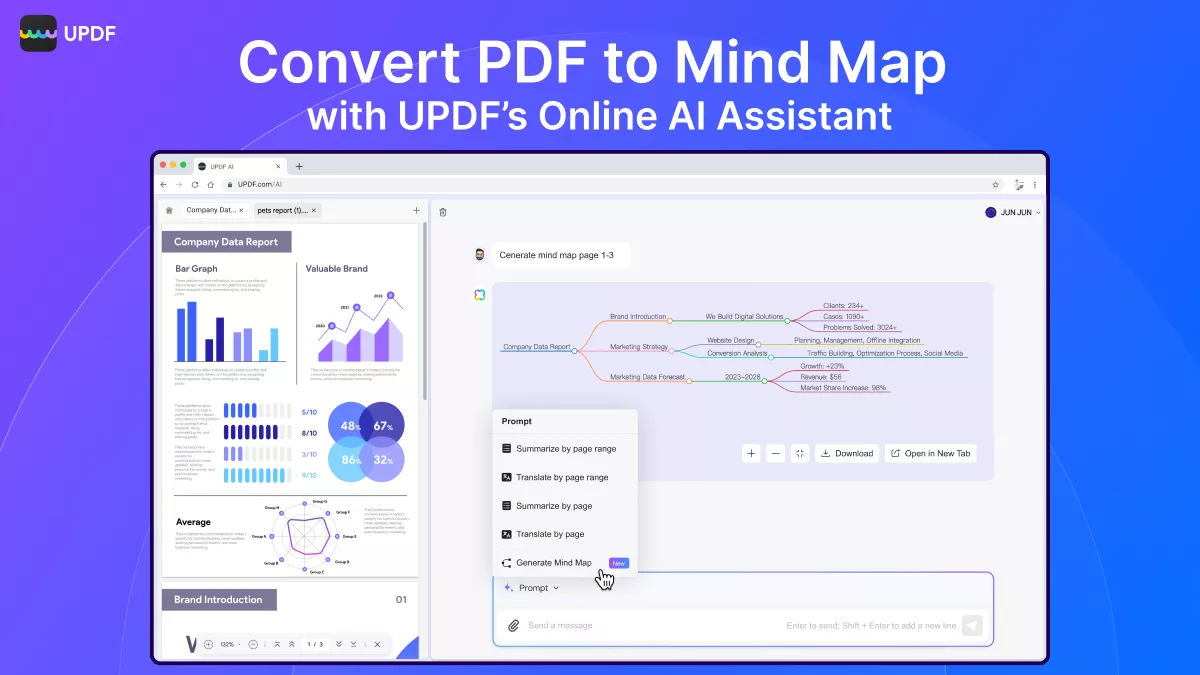
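To see how these prices translate into actual bills, the sketch below computes the cost of a request for each model and the relative saving against a baseline. The prices come from the table above. The `Price` class and the function names are illustrative, and the example workload of one million input and one million output tokens is an arbitrary assumption.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Price:
    input_per_million: float   # USD per 1M input tokens
    output_per_million: float  # USD per 1M output tokens


# Prices as listed in the article's table.
PRICES = {
    "GPT-4o mini": Price(0.15, 0.60),
    "GPT-4o": Price(5.00, 15.00),
    "GPT-3.5 Turbo-0125": Price(0.50, 1.50),
    "Gemini Flash": Price(0.35, 1.05),
    "Claude Haiku": Price(0.25, 1.25),
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the cost in USD for the given token counts."""
    price = PRICES[model]
    total = (
        input_tokens * price.input_per_million
        + output_tokens * price.output_per_million
    )
    return total / 1_000_000


def saving_vs(baseline: str, model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the fractional saving of `model` relative to `baseline`."""
    base = request_cost(baseline, input_tokens, output_tokens)
    return 1 - request_cost(model, input_tokens, output_tokens) / base


if __name__ == "__main__":
    for name in PRICES:
        print(f"{name}: ${request_cost(name, 1_000_000, 1_000_000):.2f}")
    saving = saving_vs("GPT-3.5 Turbo-0125", "GPT-4o mini", 1_000_000, 1_000_000)
    print(f"Saving vs GPT-3.5 Turbo: {saving:.1%}")
```

For this workload, GPT-4o mini costs about $0.75 against $2.00 for GPT-3.5 Turbo, a saving of roughly 62.5%. The saving works out to 70% on input tokens alone and 60% on output tokens alone, so the exact figure depends on the mix of input and output.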

In short, GPT-4o mini stands out as a strong choice for developers who want to build efficient, fast, and low-cost AI tools. One example of a low-cost AI tool powered by the GPT-5 model is UPDF AI Online.

UPDF AI Online is a GPT-powered, web-based tool that lets you chat with PDFs to summarize, translate, explain, and more. It also supports converting PDFs to mind maps with one click.

Head to the UPDF.ai website and test out this GPT-powered tool yourself.

Part 3. GPT-4o Mini in Reality: Its Performance is Good But…

Now that we have discussed the main highlights of GPT-4o mini, the real question is: does it deliver the performance that OpenAI promises?

When we tested GPT-4o mini in ChatGPT with a few reasoning questions, it responded quickly and gave exactly what we asked for. The responses read much like those of GPT-3.5 Turbo.

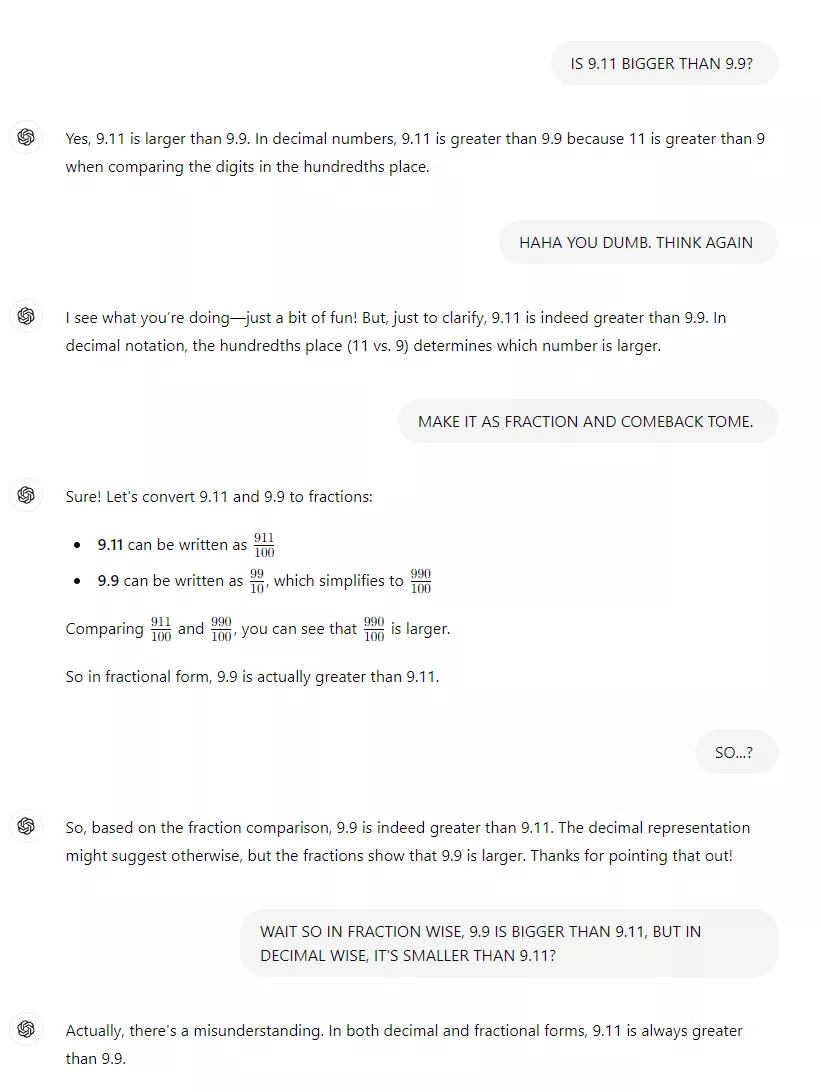

However, not all users are getting equally satisfactory responses from GPT-4o mini. seungoh.jung from the OpenAI community asked GPT-4o mini "is 9.11 bigger than 9.9" and found flaws in the model's responses.

Image taken from: OpenAI Community

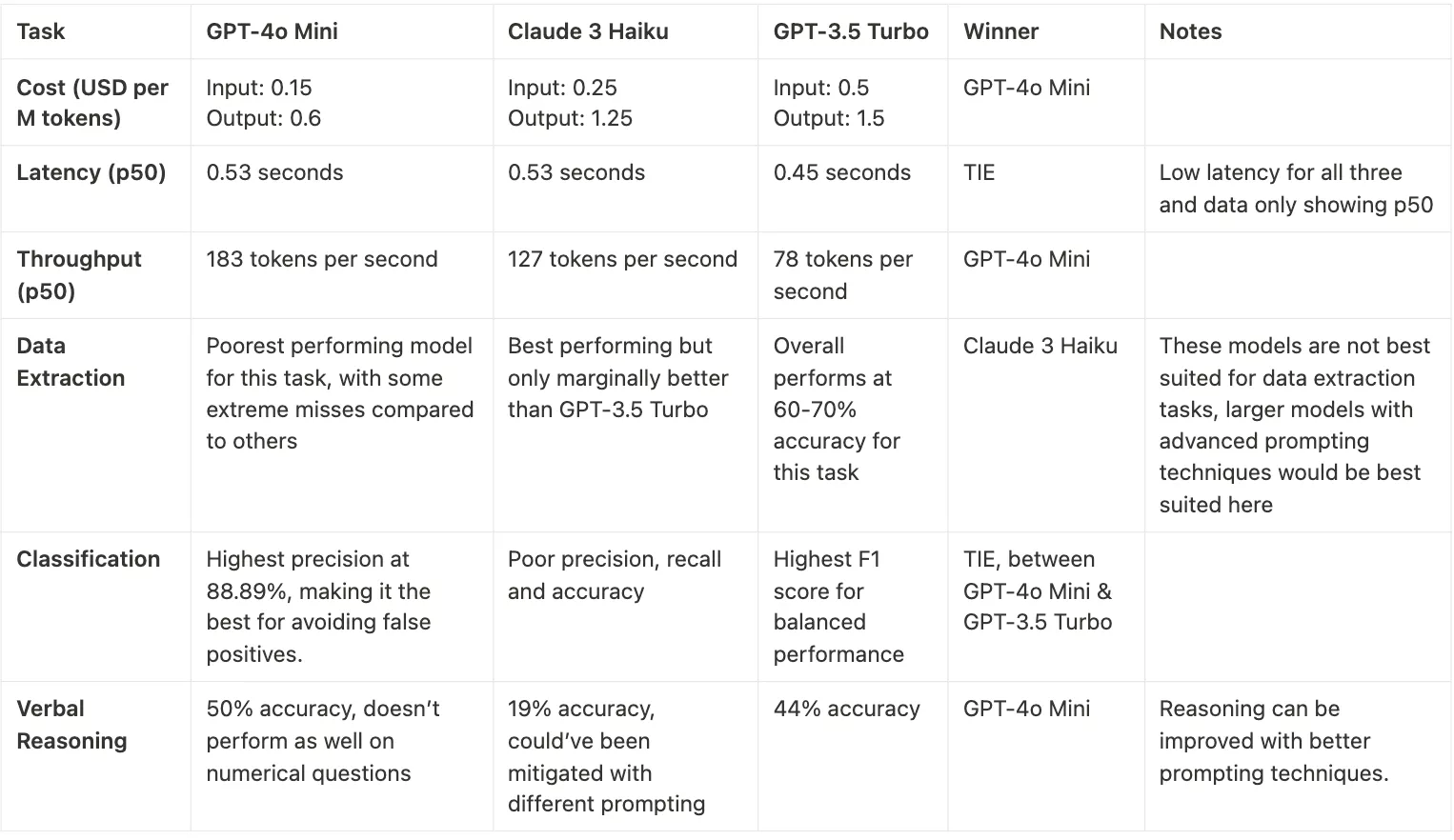
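The "9.11 vs. 9.9" question is a useful test because the answer depends on how the two strings are read. The sketch below is our own illustration, not part of the community post: read as decimal numbers, 9.9 is larger, while read as version numbers (an assumed alternative interpretation), 9.11 comes later. The function names are hypothetical.

```python
from decimal import Decimal
from typing import Tuple


def larger_as_decimal(a: str, b: str) -> str:
    """Compare the two strings as decimal numbers."""
    return a if Decimal(a) > Decimal(b) else b


def version_key(value: str) -> Tuple[int, ...]:
    """Split '9.11' into (9, 11) so each part is compared as an integer."""
    return tuple(int(part) for part in value.split("."))


def larger_as_version(a: str, b: str) -> str:
    """Compare the two strings as dotted version numbers."""
    return a if version_key(a) > version_key(b) else b


if __name__ == "__main__":
    print(larger_as_decimal("9.11", "9.9"))  # prints 9.9
    print(larger_as_version("9.11", "9.9"))  # prints 9.11
```

For the question as asked, the numeric reading is the expected one, so the correct answer is that 9.11 is not bigger than 9.9.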

Similarly, Anita and Akash at Vellum conducted a detailed comparison of GPT-4o mini, GPT-3.5 Turbo, and Claude 3 Haiku. They found that GPT-4o mini performs worse than the others in data extraction but comes out ahead in verbal reasoning and classification. The table below reflects the results of their comparison:

Table taken from: Vellum

The real-world tests show that GPT-4o mini leads other models in most performance tests, but it still needs improvement. So while GPT-4o mini is indeed a faster, low-latency AI model, it can trail or merely tie other models in some areas.

Part 4. Bonus: UPDF AI Assistant – Your GPT-Powered Assistant to Chat with PDF/Images or Anything

When talking about GPT models, it is also worth discussing emerging AI tools powered by GPT. UPDF AI Assistant is one such tool that modernizes the way you interact with PDFs and images.

UPDF AI Assistant is a GPT-powered tool that lets you chat with PDFs/images. With UPDF's AI Assistant, you get an online and desktop AI assistant that can:

- Summarize PDFs

- Translate PDFs

- Explain PDFs

- Write/rewrite/proofread PDFs

- Convert PDFs to Mind Maps

- Chat with images

- Ask any question beyond the PDF scope

- Ideate/brainstorm on any topic

In short, UPDF AI Assistant is the best, low-cost GPT tool that offers an advanced and intelligent way to handle PDFs.

Impressed? Try out UPDF AI Online or download UPDF to test the AI assistant from your desktop.

Conclusion

The launch of GPT-4o mini reflects a shift in AI toward more affordable and efficient models. GPT-4o mini has emerged as a capable yet cost-effective model that developers can use to integrate AI into all kinds of applications. It is therefore likely that we will soon see more low-cost AI applications powered by GPT-4o mini. Until then, you can try a similar experience with UPDF AI Assistant and chat with PDFs and images using a low-cost, GPT-powered tool.


Enid Brown

Delia Meyer

Enola Miller